Impact of educational instruction on medical student performance in simulation patient

Logan D. Glosser1, Conner V. Lombardi1, Wade A. Hopper2, Yixing Chen3, Alexander N. Young1, Elliott Oberneder3, Sprio Veria1, Benjamin A. Talbot1, Shirley M. Bodi4 and Coral D. Matus4

1Department of Medical Education, University of Toledo College of Medicine and Life Sciences, USA

2Department of Medical Education, Edward Via College of Osteopathic Medicine, USA

3Lloyd A. Jacobs Interprofessional Immersive Simulation Center, USA

4Department of Family Medicine, University of Toledo College of Medicine and Life Sciences, USA

Submitted: 13/02/2022; Accepted: 12/06/2022; Published: 23/06/2022

Int J Med Educ. 2022; 13:158-170; doi: 10.5116/ijme.62a5.96bf

© 2022 Logan D. Glosser et al. This is an Open Access article distributed under the terms of the Creative Commons Attribution License which permits unrestricted use of work provided the original work is properly cited. http://creativecommons.org/licenses/by/3.0

Abstract

Objectives: This study aimed to evaluate the effects, and timing of, a video educational intervention on medical student performance in manikin-based simulation patient encounters.

Methods: This prospective mixed-methods study was conducted as part of the University of Toledo College of Medicine and Life Sciences undergraduate medical curriculum. One hundred sixty-six second-year students participated in two simulations on a single day in September 2021. A 7-minute video intervention outlining the clinical diagnostic approach to pulmonary complaints was implemented. Students were randomized into 32 groups, which were divided into two cohorts. One received the video prior to simulation-1 (n=83) and the other between simulation-1 and simulation-2 (n=83). Each simulation was recorded and assessed using a 44-point standardized checklist. Comparative analysis to determine differences in performance scores was performed using independent t-tests and paired t-tests.

Results: Independent t-tests revealed the video-prior cohort performed better in simulation-1 (t(30)= 2.27, p= .03); however, in simulation-2 no significant difference was observed between the cohorts. Paired t-test analysis revealed the video-between cohort had significant improvement from simulation-1 to simulation-2 (t(15)= 3.06, p = .01); no significant difference was found for the video-prior cohort. Less prompting was seen in simulation-2 among both the video-prior (t(15)= –2.83, p= .01) and video-between cohorts (t(15)= –2.18, p= .04).

Conclusions: Simulation training and targeted educational interventions help medical students become clinically competent practitioners. Our findings indicate that guided video instruction advances students' clinical performance more than learning through simulation alone. To confirm these findings, similar investigations in other clinical training exercises should be considered.

Introduction

Healthcare is a profession that is continually evolving and, along with it, medical student education. As disease patterns, diagnostic tools, and the healthcare landscape have changed, so too have the current medical curricula leading to models that focus on achieving various competencies to assess the readiness of future physicians.1 One such change has been an increasing utilization of manikin-based simulation patient encounters (SPEs) to facilitate medical student acquisition of clinical skills.2

Despite sophisticated curriculum design and complex teaching methods utilized in medical student education, disparities exist among newly graduated physicians in their ability to effectively transfer basic science knowledge to clinical practice.3,4 There are many challenges facing medical educators in helping students attain autonomous learning and critical thinking skills.5,6 Critical thinking skills allow medical students to triage clinical scenarios, respond promptly, and make reasonable clinical decisions to provide quality patient care.6 With modern technology rapidly integrating into health care, it can feel as though doctors are akin to a computer, expected to analyze an immense amount of data to identify symptoms, diagnose disease, and compute the appropriate management.7

To develop these skills, medical education has become largely focused on the vertical integration (VI) method, defined as the gradual transition from the classroom to clinical environments.8,9 The VI method bridges students from acquiring knowledge to implementation and critical thinking in clinical practice.8,9 This method has demonstrated increased learning retention, although it is difficult to achieve.10 Moreover, there are numerous strategies available for educators to facilitate this transformation from classroom learning to application in practice. These strategies include the use of standardized patients, exposure to patient care through observation, and, increasingly, manikin-based SPEs.11-13 These strategies are typically used in combination, such that knowledge acquisition occurs with concepts presented over time and in different learning environments.8,10

Although manikin-based SPEs have been adopted by most medical schools in the United States, there is significant heterogeneity in how such exercises are conducted and evaluated. Currently, no standardized guidelines exist for designing simulation experiences to optimize medical student learning.14,15 It is unknown whether guided educational instruction impacts medical student simulation performance and learning. However, prior evidence suggests that medical students value such clinical simulations and see them as a helpful learning modality.16-19

Medical education, and the curriculum that guides it, require continual evaluation to adapt to change.20 This includes a rigorous evaluation of simulation-based education especially given the advances and the adoption of this type of learning. As such, the objective of this study was to determine the impact, and timing of, an educational video on medical student performance in manikin-based simulation. The main research questions were:

· Does peri-simulation education improve medical student clinical performance during the encounter?

· Does the timing of such education alter medical student performance, and learning, in simulation patient encounters?

· How do students perceive simulation training, and peri-simulation education, to impact their clinical abilities?

Methods

Study design and participants

All second-year medical students attending the University of Toledo College of Medicine and Life Sciences were eligible to participate in the study. The trial was explained in detail to the students, and they were assured of the anonymity and confidentiality of personal information for all responses. The University of Toledo Institutional Review Board gave approval for the study. A total of 176 medical students were eligible to participate. Ten students were unable to participate due to infection with COVID-19, leaving a total of 166 students who participated in the SPEs.

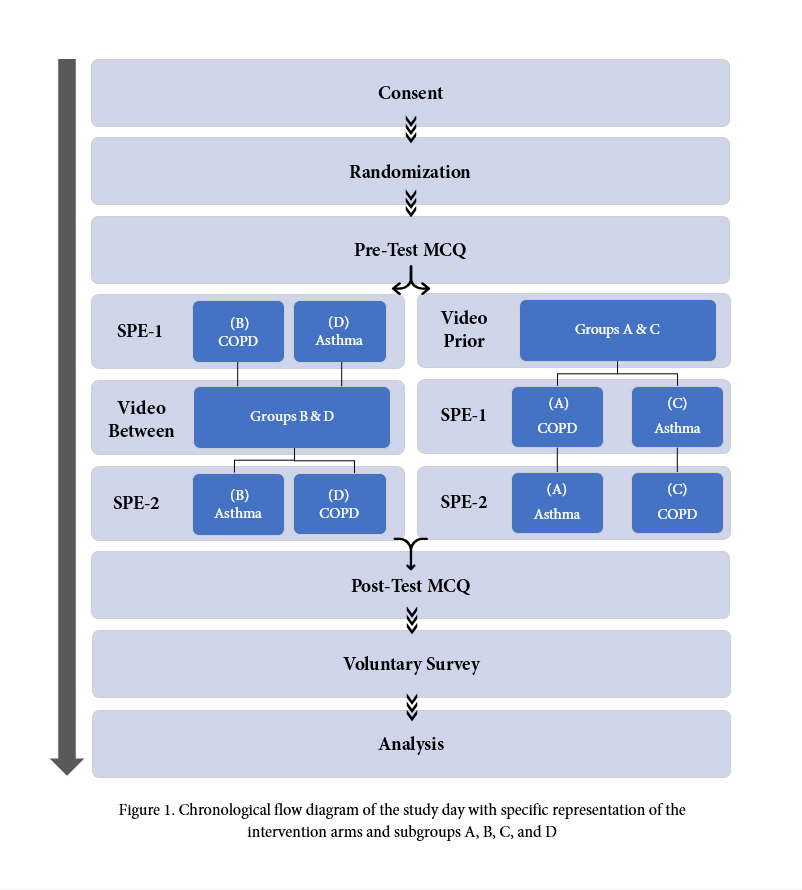

We undertook a mixed-methods study to determine the impact of a peri-simulation educational video on student performance and learning in two simulations. A cross-over type design for the video intervention was implemented, in which students either received the video before the first SPE (SPE-1) or the video between SPE-1 and the second SPE (SPE-2). A pre-test multiple-choice questionnaire (MCQ) was administered prior to SPE-1, and a post-test MCQ was administered after SPE-2, followed by a voluntary feedback survey and a debriefing of the experience with a faculty physician. The SPE scenarios encompassed a patient with asthma and a patient with chronic obstructive pulmonary disease (COPD).

A two-stage randomization strategy was implemented, as shown in Figure 1. First, students were randomized into 32 groups of approximately equal size (5–6 students each) by faculty independent of the research team. The assignment of students and the randomization process were concealed from the students and the research team. The 32 groups were then randomized into four subgroups (A, B, C, and D), each of which had a pre-randomized simulation room location and SPE scenario.

Subgroups A and C both received the video intervention before the first simulation scenario and rotated through the same environment: room, medical manikin, faculty, and simulation operator. Subgroup A was given the COPD simulation scenario first, followed by the asthma scenario, and subgroup C was given the asthma simulation scenario first, followed by the COPD scenario. Subgroups B and D both received the video intervention between the simulation scenarios and rotated through the same environment: room, medical manikin, faculty, and simulation operator. Subgroup B was given the COPD simulation scenario first, followed by the asthma scenario, and subgroup D was given the asthma simulation scenario first, followed by the COPD scenario.
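The two-stage allocation described above can be sketched in a few lines of Python. This is only an illustration, not the procedure the faculty actually used: the function name, the fixed seed, the round-robin dealing, and the assumption that the four subgroups were of equal size (eight groups each) are all hypothetical; the article states only the number of students, groups, and subgroups.

```python
import random

def two_stage_randomization(student_ids, n_groups=32,
                            subgroup_labels=("A", "B", "C", "D"), seed=0):
    """Stage 1: shuffle students into n_groups of near-equal size.
    Stage 2: shuffle the groups into equally sized subgroups (an assumption)."""
    rng = random.Random(seed)  # fixed seed is hypothetical, for reproducibility only

    # Stage 1: deal shuffled students round-robin so group sizes differ by at most one.
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    groups = [shuffled[i::n_groups] for i in range(n_groups)]

    # Stage 2: shuffle group indices, then split them evenly across the subgroup labels.
    order = list(range(n_groups))
    rng.shuffle(order)
    per_subgroup = n_groups // len(subgroup_labels)
    assignment = {}
    for k, label in enumerate(subgroup_labels):
        for g in order[k * per_subgroup:(k + 1) * per_subgroup]:
            assignment[g] = label
    return groups, assignment

# From the text: A and C saw the video before SPE-1; B and D saw it between the SPEs.
VIDEO_TIMING = {"A": "prior", "C": "prior", "B": "between", "D": "between"}
# From the text: A and B ran the COPD case first; C and D ran the asthma case first.
FIRST_SCENARIO = {"A": "COPD", "B": "COPD", "C": "asthma", "D": "asthma"}

# 166 hypothetical student identifiers, numbered 1..166.
groups, assignment = two_stage_randomization(range(1, 167))
```

With 166 students and 32 groups, dealing round-robin yields six groups of six and 26 groups of five, which matches the reported 5–6 students per group.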

There were four operators running the SPE environments. The operators ran the same case in the same room with the same manikin for the duration of the study. The operators were given a standardized training session for their respective cases to keep the language and overall atmosphere the same for each group.

Simulation scenarios

The two SPE scenarios and the information the students were expected to obtain were developed by faculty, independent of the research team, as part of the standard undergraduate medical education curriculum. The SPEs were a formative, non-graded group activity designed as an opportunity for medical student learning.

The students were given the following information on a display screen prior to starting the SPE: "You will have 15 minutes to conduct a focused history and physical exam. You will present the case afterwards as you will do on your clerkship rotations."

The COPD case represented a 61-year-old male, one pack/day smoker since he was 15 years old, with worsening dyspnea over the past two to three days, acutely worse the morning of the presentation. Relevant high-yield objective information given to the students when prompted included a temperature of 37.1 °C, heart rate of 124 beats per min, respiratory rate of 28 breaths per min, blood pressure of 149/94 mmHg, pulse oximetry oxygen saturation of 84%, forced expiratory volume in one sec to forced vital capacity ratio 60% of expected, brain natriuretic peptide level of 88 pg/mL, initial arterial blood gas pH of 7.3, pCO2 of 55 mmHg, pO2 of 55 mmHg, bicarbonate of 26 mEq/L, and imaging consistent with acute COPD exacerbation.

The asthma case represented a 10-year-old boy with dyspnea after playing outside. Students were notified that he was visibly distressed in the waiting room, where he was bent over with his hands on his knees. Relevant high-yield objective information given to the students when prompted included a temperature of 37 °C, blood pressure of 104/68 mmHg, heart rate of 110 beats per min, respiratory rate of 30 breaths per min, pulse oximetry oxygen saturation of 84%, forced expiratory volume in one sec to forced vital capacity ratio 65% of expected, pulmonary function test after beta-agonist therapy 85% of expected, initial arterial blood gas pH of 7.37, pCO2 of 55 mmHg, pO2 of 65 mmHg, bicarbonate of 22 mEq/L, and imaging consistent with acute asthma exacerbation.

Instruments

Simulation performance scores were calculated using separate standardized checklists for the COPD and asthma scenarios, respectively, as shown in Table 1. The checklists were modified versions of those used for the graded objective structured clinical examinations performed as part of the standard undergraduate medical education curriculum.

Two faculty physicians performed the checklist modifications for the SPEs. The checklists encompassed key aspects of the history of presenting illness (HPI), physical exam, assessment/plan/interventions (A/P/I), and the number of times the facilitator had to prompt students to keep the simulation moving forward. There were 44 checklist items in total for the SPEs, with each item worth 1 point.

Individual baseline student knowledge was assessed with a multiple-choice question (MCQ) pre-test examination completed before SPE-1, as shown in Appendix 1. At the conclusion of SPE-2, students were re-assessed using a post-test MCQ, which consisted of the same ten questions and answers (ranging from two to five possible answers) as the pre-test. The MCQ points per question were weighted based on difficulty as pre-assigned by a faculty physician among the study personnel. There were three questions weighted to be worth 2 points, one question worth 3 points, and the remaining six questions each worth 1 point, totaling a maximum of 15 points available. The MCQ examination was intended to measure student learning separate from the dimension of clinical performance. Expert physicians verified the content validity of the MCQ. However, no statistical certification of MCQ reliability was performed.
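The weighting scheme can be checked with a short sketch. The mapping of question numbers to weights below is hypothetical, since the article reports only how many questions carried each weight, not which ones; the function name is likewise an assumption for illustration.

```python
# Hypothetical question -> weight mapping. Only the counts come from the article:
# three 2-point questions, one 3-point question, and six 1-point questions.
WEIGHTS = {1: 1, 2: 1, 3: 2, 4: 1, 5: 2, 6: 1, 7: 3, 8: 1, 9: 2, 10: 1}

def mcq_score(correct_questions):
    """Sum the weights of the questions answered correctly (duplicates ignored)."""
    return sum(WEIGHTS[q] for q in set(correct_questions))

# Maximum attainable score: 3*2 + 1*3 + 6*1 = 15 points.
MAX_SCORE = sum(WEIGHTS.values())

# Example: a student who misses only the (hypothetical) 3-point question.
example = mcq_score([1, 2, 3, 4, 5, 6, 8, 9, 10])
```

Under this mapping, a perfect paper scores 15 points and the example student scores 12.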

Students had the option to voluntarily complete an anonymous 5-point Likert Scale feedback questionnaire at the conclusion of the MCQ post-test. The questionnaire was composed of three questions intended to gather students' perspectives on the impact of the intervention, debriefing, and overall simulation training experience on their learning.

Data collection methods

Two blinded independent evaluators viewed video recordings of the SPEs. Both reviewers were 4th-year medical students who were trained independently by a faculty member to ensure the accuracy of the assessment. The reviewers were blinded to the intervention arm each group was part of and to whether the group was in SPE-1 or SPE-2. The evaluators recorded whether the students performed the checklist items for the respective asthma and COPD scenarios. A third independent reviewer compiled the two reviewers' checklists and found no instances in which the independent reviewers differed in their scoring. The MCQ pre- and post-tests, along with the feedback questionnaire, were performed using an online platform which recorded each student's responses. Faculty placed the students' scores into their respective groups such that individual students' scores remained deidentified, and performance scores could not be traced back to the individual name of the student.

Statistical analysis

The primary objective was to compare the performance effect as measured by an adapted analysis of student performance in a group setting of simulated patient encounters. The null hypothesis that the intervention effect = 0 was tested by means of Student's paired t-tests and independent t-tests. The scores were expressed as means plus or minus standard deviations (SD). The mean and SD of the within-group difference capture the treatment effect and the paired nature of the design; these were used as the basis for constructing confidence intervals for the difference between the means and for hypothesis testing. This analysis approach made two main assumptions (in addition to the normality assumption for the Student's paired t-test): no period effect and no intervention-period interaction. Secondary analysis was performed to compare the sub-categorization of the scoring checklist, compare the multiple-choice questionnaire pre-test and post-test, and compare the COPD versus asthma performance scores from SPE-1 and SPE-2. All statistical tests were two-sided, and a p-value ≤ .05 indicated statistical significance. All statistical tests were performed using Microsoft Excel.
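For readers who want to see the two test statistics spelled out, the following is a minimal sketch using only the Python standard library. It is not the authors' Excel workflow. The pooled-variance form of the independent test is an assumption, chosen because it gives the df = 30 reported for two arms of 16 groups; the function names and the four-group example scores are made up for illustration.

```python
import math
import statistics

def paired_t(first, second):
    """Paired t statistic for (second - first); degrees of freedom = n - 1."""
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1

def independent_t(x, y):
    """Pooled-variance (Student's) t statistic for mean(x) - mean(y); df = nx + ny - 2."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * statistics.variance(x)
                  + (ny - 1) * statistics.variance(y)) / (nx + ny - 2)
    se = math.sqrt(pooled_var * (1 / nx + 1 / ny))
    return (statistics.mean(x) - statistics.mean(y)) / se, nx + ny - 2

# Made-up checklist scores for four groups, NOT study data.
spe1_scores = [20, 22, 19, 24]
spe2_scores = [23, 24, 22, 25]

t_paired, df_paired = paired_t(spe1_scores, spe2_scores)   # same groups, two SPEs
t_indep, df_indep = independent_t(spe1_scores, spe2_scores)  # treated as separate arms
```

The paired form uses only the within-group differences, which is why it suits the SPE-1 versus SPE-2 comparison within a cohort, while the independent form compares the two cohorts within a single SPE.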

Results

One hundred sixty-six students participated in the study. A total of 64 SPEs were assessed (two SPEs for each of the 32 groups). The separate intervention arms' mean simulation totals and sub-categorical scores of SPE-1 and SPE-2 are shown in Table 2. A comparison of the video prior versus the video between total and sub-categorical scores in SPE-1 and SPE-2 is shown in Table 3.

A significant difference was observed when comparing the mean performance scores of the two intervention arms in SPE-1, with the video prior cohort performing better with a mean of 23.81 (SD= 3.41) versus 21.06 (SD= 3.41) on independent t-test (t(30)= 2.27, p = .029). The video prior cohort scored higher in the HPI subcategory of SPE-1 with a mean of 11.25 (SD= 2.72) versus 9.31 (SD= 2.15) among the video between cohort (t(30)= 2.23, p = .033). The video prior cohort had a lower mean number of times prompting in SPE-1 of 2.06 (SD= 0.93) compared to 3.68 (SD= 2.08) among the video between cohort (t(30)= –2.84, p = .007).

In SPE-2, no significant difference was found between the total performance scores. There was a significant difference between the number of times prompted, with the video prior cohort requiring fewer prompts, with a mean of 1.06 (SD= 0.77) versus 2.31 (SD= 1.25) for the video between cohort (t(30)= –3.40, p = .001).
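The between-cohort t statistics reported above can be approximately recomputed from the published means and SDs alone. The sketch below assumes 16 groups per intervention arm (32 groups split into two cohorts, consistent with df = 30) and a pooled-variance Student's t-test; the function name is hypothetical, and the inputs are the rounded summary values printed in the text rather than the raw data.

```python
import math

def t_from_summary(mean1, sd1, mean2, sd2, n1=16, n2=16):
    """Pooled-variance t statistic from summary statistics (df = n1 + n2 - 2)."""
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se

# (video prior mean, SD, video between mean, SD, reported t), as printed in the text.
reported = {
    "SPE-1 total score": (23.81, 3.41, 21.06, 3.41, 2.27),
    "SPE-1 HPI score": (11.25, 2.72, 9.31, 2.15, 2.23),
    "SPE-1 prompts": (2.06, 0.93, 3.68, 2.08, -2.84),
    "SPE-2 prompts": (1.06, 0.77, 2.31, 1.25, -3.40),
}

for label, (m1, s1, m2, s2, t_reported) in reported.items():
    t = t_from_summary(m1, s1, m2, s2)
    print(f"{label}: recomputed t = {t:.2f}, reported t = {t_reported:.2f}")
```

Each recomputed value falls within about 0.01 of the reported statistic, a gap that is consistent with the means and SDs having been rounded to two decimals before publication.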

No significant difference among the video prior cohort was observed when analyzing the scores using paired t-tests of SPE-1 vs SPE-2 (t(15)= –1.18, p = .257). A significant difference was seen between the SPE-1 and SPE-2 scores of the video between cohort (t(15)= 3.06, p = .008), mainly attributed to a significant difference in the HPI sub-categorical score (t(15)= 4.50, p = .001). A significant difference was seen in the number of times prompted for both the video prior and the video between cohorts (respectively t(15)= –2.83, p = .01, and t(15)= –2.18, p = .04).

To ensure a true difference was observed, a sub-analysis of the scenarios was performed. Independent t-tests showed no significant difference between the SPE-1 scores when analyzing asthma vs COPD scenarios nor the SPE-2 scores of asthma vs COPD scenarios (Appendix 2). Additionally, no significant differences were shown between the SPE-1 and SPE-2 scores of the asthma scenario alone or the COPD scenario alone.

For the sub-analysis of the MCQ performance results, the distribution of measured baseline variables was balanced between the two intervention arms. The mean MCQ pre- and post-test performance scores are shown in Table 4. A significant difference was found when assessing the MCQ pre- and post-test scores of the total cohort, with the mean score rising from 11.33 (SD= 0.93) on the pre-test to 13.74 (SD= 0.67) on the post-test (t(31)= 13.23, p = .001). This difference was also seen when separately assessing the video prior and video between cohorts' mean pre- and post-test MCQ scores (respectively t(15)= 10.14, p = .001, and t(15)= 8.44, p = .001).

One hundred and twenty-three students completed the voluntary student feedback questionnaire. The majority of those who completed the survey (75%) indicated that they either agreed or strongly agreed that the educational intervention was beneficial to their learning. Conversely, only a small minority (5%) indicated that they did not find the intervention to be beneficial. The three-question student feedback questionnaire, which used a 5-point Likert Scale, and the responses given are shown in Appendix 3.

Discussion

Simulated patient encounters are becoming a mainstay of the medical school curriculum as medical educators continue to recognize the benefit of risk-free clinical skills practice that simulated clinical scenarios offer, especially to medical students in their pre-clinical years.21 The process of learning, especially for medical students, goes beyond memory recall as students need to learn how to demonstrate both competencies of knowledge and trustworthy, empathetic connections with patients.22 Physician empathy is not only a foundation of the physician-patient relationship; it also increases patient satisfaction23 and adherence to medical therapy,24 and improves patient outcomes.25 SPEs allow medical students to practice their clinical skills, history and physical examinations, and medical knowledge in a simulated environment where they are encouraged to try and fail before they enter the clinical environment to work with real patients. Although several studies have investigated the effectiveness of SPEs in medical education, limited information exists on the impact and timing of guided peri-simulation education on student performance. In this study, our team investigated how a video educational intervention administered either before or between simulations affected student performance in respiratory SPEs.

Impact of peri-simulation education

The cohort that had the educational intervention between SPE-1 and SPE-2 had a significant improvement in their performance in SPE-2. On further analysis, this improvement was mostly attributable to higher accuracy in the HPI subcategory. The improvement among the students with the educational intervention was most likely due to the intervention itself, as the analysis of all groups showed no significant difference between the performance on SPE-1 and SPE-2 (Table 2). When comparing the performance scores across the two SPEs for those who received the video intervention prior to SPE-1, there was no significant difference. This suggests the intervention had already made its impact, and that impact was retained in SPE-2. Furthermore, this may indicate that the score improvement in the video between cohort was not due to repeated exposure and practice.

The performance of the students in the SPEs showed the educational intervention had a positive impact on student performance. The students with the educational intervention prior to SPE-1 scored higher than those without the intervention, and although the video between cohort scored higher on SPE-2, the difference between the intervention arms was not statistically significant. This suggests that the intervention prior to SPE-1 had a positive impact on student performance across both SPE-1 and SPE-2. When assessing the group performance by comparing the scores from COPD vs asthma, there was no significant result, suggesting that the cases themselves did not impact the group performance scoring assessment.

Both cohorts had a significant reduction in the number of times they needed to be prompted to continue progressing through the simulated patient encounters, which is most likely attributable to the experience of the simulation itself rather than the educational intervention. As previously mentioned, patient simulation is an experience where students are encouraged to try and fail. It often takes students an initial period to familiarize themselves with the simulation environment and be comfortable enough to progress through various portions of the simulation assertively. Thus, it is not surprising that students needed to be prompted less in SPE-2 as they were likely less apprehensive about the simulation experience in general.

Overall, both cohorts had similar baseline results from the pre-test multiple-choice questionnaire, which suggests that baseline knowledge did not impact the results. Furthermore, both cohorts had statistically significant improvement from the pre-test to post-test MCQ results. This suggests that the exercise had a positive impact on student learning.

Strengths and limitations

The major benefit of the cross-over design in this cohort is that it allowed within-group comparison, which would not have been possible in a conventional parallel-group design. In addition, this allowed all students to receive the educational intervention as part of the simulation experience. While a control group that did not receive the educational intervention in any respect would have been optimal to understand the exact impact of the educational intervention, regardless of its temporal placement, this was not ethically possible as every medical student needed to have the same amount of instruction during the SPE day.

There are several limitations to the study worth noting. Our study used a crossover-type design for the intervention, rather than one group receiving the intervention and one no-intervention group. Although a true control group may provide a stronger argument, this design was used in an attempt to provide all the students with the same overall learning experience. Additionally, the simulated patient scenarios may have differed depending on the order in which the students gathered information and asked questions. Although the operators of the case scenarios underwent training to standardize the experience, the presentation of the cases may have differed slightly between the groups from intrapersonal and interpersonal differences between the two operators running each simulation case. Despite simulation rooms being standardized for patient positioning and equipment necessary for the experience, there was a slight variation in the overall atmosphere. This difference included fixed structural variations of the rooms as they were built as either an operating room, a labor and delivery room, an emergency department room, or an intensive care unit room, which may have impacted how students approached the simulated patient. Finally, the SPEs in our study were formative, non-graded activities. As such, this may have impacted students' engagement in the SPEs and therefore their performance.

Conclusions

Our study showed that a simulated patient encounter platform in combination with a clinical reasoning framework is an effective method that can be used in medical education. Integration of succinct learning objectives with educational interventions improved diagnostic assessment and rates of correct diagnosis. Learners showed simulation performance improvement directly following the educational intervention regardless of whether the intervention was delivered before or in-between exercises. Most participants found that the peri-simulation education was beneficial to their learning. Similar investigations in other medical student clinical training exercises should be explored to improve the learning process in manikin-based simulation patient encounters.

Acknowledgements

We would like to give a special thanks to the following for their contributions to the study: Karla Dixon, the Lloyd A. Jacobs Interprofessional Immersive Simulation Center staff, and The University of Toledo College of Medicine and Life Sciences.

Conflicts of Interest

The authors declare that they have no conflict of interest.

Supplementary materials

Supplementary file 1

Appendix 1. Multiple-Choice Questionnaire (MCQ) pre-test and post-test (S1.pdf, 59 kb)

Supplementary file 2

Appendix 2. Mean performance scores by COPD and Asthma case scenarios (S2.pdf, 51 kb)

Supplementary file 3

Appendix 3. Likert Scale student feedback questionnaire (S3.pdf, 90 kb)

References

- Holmboe ES. Competency-based medical education and the ghost of Kuhn: reflections on the messy and meaningful work of transformation. Acad Med. 2018; 93: 350-353.

- Alluri RK, Tsing P, Lee E and Napolitano J. A randomized controlled trial of high-fidelity simulation versus lecture-based education in preclinical medical students. Med Teach. 2016; 38: 404-409.

- Jorm C and Roberts C. Using complexity theory to guide medical school evaluations. Acad Med. 2018; 93: 399-405.

- Monrouxe LV, Bullock A, Gormley G, Kaufhold K, Kelly N, Roberts CE, Mattick K and Rees C. New graduate doctors' preparedness for practice: a multistakeholder, multicentre narrative study. BMJ Open. 2018; 8: 023146.

- Yun B, Su Q, Cai YT, Chen L, Qu CR and Han L. The effectiveness of different teaching methods on medical or nursing students: protocol for a systematic review and network meta-analysis. Medicine (Baltimore). 2020; 99: 21668.

- Croskerry P. From mindless to mindful practice--cognitive bias and clinical decision making. N Engl J Med. 2013; 368: 2445-2448.

- Guragai M and Mandal D. Five skills medical students should have. JNMA J Nepal Med Assoc. 2020; 58: 269-271.

- Brauer DG and Ferguson KJ. The integrated curriculum in medical education: AMEE Guide No. 96. Med Teach. 2015; 37: 312-322.

- Wijnen-Meijer M, van den Broek S, Koens F and Ten Cate O. Vertical integration in medical education: the broader perspective. BMC Med Educ. 2020; 20: 509.

- Norman G. Teaching basic science to optimize transfer. Med Teach. 2009; 31: 807-811.

- Meerdink M and Khan J. Comparison of the use of manikins and simulated patients in a multidisciplinary in situ medical simulation program for healthcare professionals in the United Kingdom. J Educ Eval Health Prof. 2021; 18: 8.

- Herbstreit F, Merse S, Schnell R, Noack M, Dirkmann D, Besuch A and Peters J. Impact of standardized patients on the training of medical students to manage emergencies. Medicine (Baltimore). 2017; 96: 5933.

- Alsaad AA, Davuluri S, Bhide VY, Lannen AM and Maniaci MJ. Assessing the performance and satisfaction of medical residents utilizing standardized patient versus mannequin-simulated training. Adv Med Educ Pract. 2017; 8: 481-486.

- Ryall T, Judd BK and Gordon CJ. Simulation-based assessments in health professional education: a systematic review. J Multidiscip Healthc. 2016; 9: 69-82.

- Okuda Y, Bryson EO, DeMaria S, Jacobson L, Quinones J, Shen B and Levine AI. The utility of simulation in medical education: what is the evidence? Mt Sinai J Med. 2009; 76: 330-343.

- Paskins Z and Peile E. Final year medical students' views on simulation-based teaching: a comparison with the Best Evidence Medical Education Systematic Review. Med Teach. 2010; 32: 569-577.

- Schmidt-Huber M, Netzel J and Kiesewetter J. On the road to becoming a responsible leader: a simulation-based training approach for final year medical students. GMS J Med Educ. 2017; 34: 34.

- El Naggar MA and Almaeen AH. Students' perception towards medical-simulation training as a method for clinical teaching. J Pak Med Assoc. 2020; 70: 618-623.

- Agha S, Alhamrani AY and Khan MA. Satisfaction of medical students with simulation based learning. Saudi Med J. 2015; 36: 731-736.

- Jayawickramarajah PT. How to evaluate educational programmes in the health professions. Med Teach. 1992; 14: 159-166.

- Al-Elq AH. Simulation-based medical teaching and learning. J Family Community Med. 2010; 17: 35-40.

- Batt-Rawden SA, Chisolm MS, Anton B and Flickinger TE. Teaching empathy to medical students: an updated, systematic review. Acad Med. 2013; 88: 1171-1177.

- Kim SS, Kaplowitz S and Johnston MV. The effects of physician empathy on patient satisfaction and compliance. Eval Health Prof. 2004; 27: 237-251.

- Vermeire E, Hearnshaw H, Van Royen P and Denekens J. Patient adherence to treatment: three decades of research. A comprehensive review. J Clin Pharm Ther. 2001; 26: 331-342.

- Di Blasi Z, Harkness E, Ernst E, Georgiou A and Kleijnen J. Influence of context effects on health outcomes: a systematic review. Lancet. 2001; 357: 757-762.